Note: This post was updated in October 2025 and reflects currently available pricing information, including estimates by industry analysts where direct pricing is not available from the vendor website.

If you’re looking to deploy virtual machines for your next AI training or inference project, you may have noticed that the cloud GPU market is a wild place at the moment.

Both hyperscalers and new AI-focused startups compete fiercely to get access to the latest premium cloud GPUs. The cost of GPU instances and clusters varies greatly between different service providers. It’s not easy to get a clear view of your options. That’s why we’re here to help.

In this article, we go through some of the common options for deploying cloud GPU instances, from hyperscalers such as Google Cloud Platform, Amazon AWS, and Microsoft Azure to NeoClouds. We also assess several of the best-known cloud computing providers (Nebius, RunPod, Lambda, CoreWeave, OVH, Scaleway, and Paperspace) and introduce you to DataCrunch as a production-grade, affordable option to consider.

Cloud GPU Hyperscaler Pricing

Many AI developers look first to hyperscalers such as Google or Amazon when starting their cloud computing journey. This makes sense because many people have worked with a preferred cloud computing platform in the past and know how it works. It is also not uncommon for the big providers to offer large amounts of free credits for startups to use on AI-related projects. Congrats if you got your credits; here is how you can use them!

Google Cloud Platform GPU Pricing

The Google Cloud Platform offers virtual machines for machine learning use cases through Compute Engine. Each GPU machine type that GCP offers has its own name, such as a3-highgpu-8g, and you can choose from multiple international data center locations.

| Instance name | GPU | Region | Price per instance (USD/hr) |
|---|---|---|---|
| a3-highgpu-1g | H100 80GB | us-central1 | $11.06 |
| a3-highgpu-8g | H100 80GB x8 | europe-west4 | $88.49 |
| a2-ultragpu-1g | A100 80GB | europe-west4 | $6.25 |
| a2-ultragpu-2g | A100 80GB x2 | europe-west4 | $12.49 |
| a2-ultragpu-4g | A100 80GB x4 | europe-west4 | $24.99 |
| a2-ultragpu-8g | A100 80GB x8 | europe-west4 | $49.98 |
| a2-highgpu-1g | A100 40GB | europe-west4 | $4.05 |
| a2-highgpu-2g | A100 40GB x2 | europe-west4 | $8.10 |
| a2-highgpu-4g | A100 40GB x4 | europe-west4 | $16.02 |
| a2-highgpu-8g | A100 40GB x8 | europe-west4 | $32.40 |

Currently, GCP offers the NVIDIA H100 both as a single-GPU a3-highgpu-1g instance for $11.06 per hour and as an 8-GPU a3-highgpu-8g instance for $88.49 per hour. The Google Cloud Platform also has many different options for both the 80GB and 40GB versions of the A100.
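Because GCP lists prices per instance, dividing each price by its GPU count makes the machine sizes directly comparable. A minimal sketch using the H100 and A100 80GB rows from the table above; `GCP_INSTANCES` and `per_gpu_rates` are illustrative names, not part of any GCP API.

```python
# (GPU count, hourly instance price in USD), copied from the GCP table above.
GCP_INSTANCES: dict[str, tuple[int, float]] = {
    "a3-highgpu-1g": (1, 11.06),
    "a3-highgpu-8g": (8, 88.49),
    "a2-ultragpu-1g": (1, 6.25),
    "a2-ultragpu-2g": (2, 12.49),
    "a2-ultragpu-4g": (4, 24.99),
    "a2-ultragpu-8g": (8, 49.98),
}


def per_gpu_rates(instances: dict[str, tuple[int, float]]) -> dict[str, float]:
    """Return each instance's hourly price per GPU, rounded to cents."""
    return {name: round(price / gpus, 2) for name, (gpus, price) in instances.items()}


if __name__ == "__main__":
    for name, rate in per_gpu_rates(GCP_INSTANCES).items():
        print(f"{name:16} ${rate:.2f} per GPU-hour")
```

Within a cent of rounding, each GPU type costs the same per GPU at every machine size, so on these list prices the choice of size does not change the per-GPU rate.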

Google also offers older cloud GPU options, including the NVIDIA V100 Tensor Core GPU at $2.55 per GPU per hour, in instances of up to 8x V100. For the V100, GCP is a bit more affordable than Amazon AWS and Microsoft Azure.

| Instance name | GPU | Region | Price per instance (USD/hr) |
|---|---|---|---|
| nvidia-tesla-v100-1g | V100 16GB | europe-west4-b | $2.55 |
| nvidia-tesla-v100-2g | V100 16GB x2 | europe-west4-b | $5.10 |
| nvidia-tesla-v100-4g | V100 16GB x4 | europe-west4-b | $10.20 |
| nvidia-tesla-v100-8g | V100 16GB x8 | europe-west4-b | $20.40 |

For more information and the latest costs, see GCP Compute Pricing.

Amazon AWS Cloud GPU Pricing

Amazon offers a number of cloud GPUs through AWS EC2. Each virtual machine has its own Amazon instance type name, e.g., “p5.48xlarge.” This naming convention can take some time to decipher, so here is a quick cheat sheet of the different options.

| Instance type | GPU | Region | Price per instance (USD/hr) |
|---|---|---|---|
| p6-b200.48xlarge | B200 192GB x8 | us-east-1 | $113.93 |
| p5en.48xlarge | H200 141GB x8 | us-east-1 | $63.30 |
| p5e.48xlarge | H200 141GB x8 | Limited availability | ~$40-45 (Capacity Blocks only) |
| p5.48xlarge | H100 80GB x8 | us-east-1 | $55.04 |
| p4de.24xlarge | A100 80GB x8 | us-east-1 | Available (price reduced ~33%) |
| p4d.24xlarge | A100 40GB x8 | us-east-1 | $21.95 |
| p3.2xlarge | V100 16GB x1 | us-east-1 | $3.06 |
| p3.8xlarge | V100 16GB x4 | us-east-1 | $12.24 |
| p3.16xlarge | V100 16GB x8 | us-east-1 | $24.48 |

The B200 is listed at $113.93 per hour for an 8x 192GB instance, and the H200 comes in two versions, one of which has limited availability. The H100 is currently offered only in 8-GPU instances, at $55.04 per hour. The A100 40GB is listed at $21.95 for an 8-GPU instance, while the 80GB version (p4de.24xlarge) is listed as available with a price reduction but no hourly rate.

Amazon has multiple options on offer for the V100 from 1x to 8x GPU instances across various AWS EC2 regions starting from $3.06 per GPU or $24.48 for an 8xV100 instance.
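Not every AWS row has a plain on-demand list price (p5e is sold through Capacity Blocks, and p4de only shows a price reduction), so a per-GPU comparison has to skip those rows. A small sketch over the cheat sheet above; `AWS_INSTANCES` and `per_gpu_prices` are illustrative names, and `None` is simply our marker for "no list price given".

```python
from typing import Optional

# (GPU model, GPU count, hourly instance price in USD or None if no list price is given)
AWS_INSTANCES: dict[str, tuple[str, int, Optional[float]]] = {
    "p6-b200.48xlarge": ("B200", 8, 113.93),
    "p5en.48xlarge": ("H200", 8, 63.30),
    "p5e.48xlarge": ("H200", 8, None),       # Capacity Blocks only
    "p5.48xlarge": ("H100", 8, 55.04),
    "p4de.24xlarge": ("A100 80GB", 8, None),  # listed as available, no hourly rate
    "p4d.24xlarge": ("A100 40GB", 8, 21.95),
    "p3.2xlarge": ("V100", 1, 3.06),
    "p3.8xlarge": ("V100", 4, 12.24),
    "p3.16xlarge": ("V100", 8, 24.48),
}


def per_gpu_prices(
    instances: dict[str, tuple[str, int, Optional[float]]],
) -> dict[str, tuple[str, float]]:
    """Map each priced instance type to (GPU model, hourly price per GPU)."""
    result: dict[str, tuple[str, float]] = {}
    for name, (gpu, count, price) in instances.items():
        if price is None:
            continue  # skip rows without an on-demand list price
        result[name] = (gpu, round(price / count, 2))
    return result


if __name__ == "__main__":
    for name, (gpu, rate) in per_gpu_prices(AWS_INSTANCES).items():
        print(f"{name:18} {gpu:10} ${rate:.2f} per GPU-hour")
```

On these list prices, the 8x H100 p5.48xlarge works out to $6.88 per GPU-hour and the 8x B200 p6-b200.48xlarge to about $14.24.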

Microsoft Azure Cloud GPU Pricing

Microsoft Azure offers a number of high-performance cloud GPUs across different international data center locations.

| Instance name | GPU | Region | Price per instance (USD/hr) |
|---|---|---|---|
| ND96isr H200 v5 | H200 141GB x8 | East US | $110.24 |
| ND GB200 v6 | GB200 (4x B200 + 2x Grace CPU) | Limited preview | Not yet public |
| NC40ads H100 v5 | H100 80GB | East US | $6.98 |
| NC80adis H100 v5 | H100 80GB x2 | East US | $13.96 |
| ND96isr H100 v5 | H100 80GB x8 | East US | $98.32 |
| NC24ads A100 v4 | A100 80GB | East US | $3.67 |
| NC48ads A100 v4 | A100 80GB x2 | East US | $7.35 |
| NC96ads A100 v4 | A100 80GB x4 | East US | $14.69 |
| NC6s v3 | V100 16GB | East US | $3.06 |
| NC12s v3 | V100 16GB x2 | East US | $6.12 |
| NC24rs v3 | V100 16GB x4 | East US | $12.24 |

The H200 is listed at $110.24 per hour for an 8x 141GB instance, and a single-GPU H100 instance costs $6.98 per hour. The A100 comes in several sizes, ranging from $3.67 per hour for a single GPU to $14.69 for a 4x A100 instance. The V100 also has several options, ranging from $3.06 per hour to $12.24 for a 4x V100 instance. In addition to Windows, you can run Ubuntu and other Linux distributions on your Azure virtual machine instance. For up-to-date pricing information on Microsoft Azure, see Linux Virtual Machine Pricing.
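The Azure table lists the single-GPU NC40ads H100 v5 at $6.98 and the 8-GPU ND96isr H100 v5 at $98.32 per hour, and these only become comparable once divided by the GPU count. The sketch below does just that arithmetic; it does not account for differences in CPU, memory, or networking between the two VM series.

```python
# Hourly list prices (USD) for the two Azure H100 sizes in the table above.
NC40ADS_H100_V5 = 6.98   # 1x H100 80GB
ND96ISR_H100_V5 = 98.32  # 8x H100 80GB

# Per-GPU rate of the 8-GPU size, and its premium over the single-GPU size.
nd_per_gpu = ND96ISR_H100_V5 / 8
premium_pct = (nd_per_gpu / NC40ADS_H100_V5 - 1) * 100

print(f"ND96isr H100 v5: ${nd_per_gpu:.2f} per GPU-hour")
print(f"Premium over NC40ads H100 v5: {premium_pct:.0f}%")
```

That works out to $12.29 per GPU-hour for the ND96isr H100 v5, roughly 76% more per GPU than the NC40ads H100 v5.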

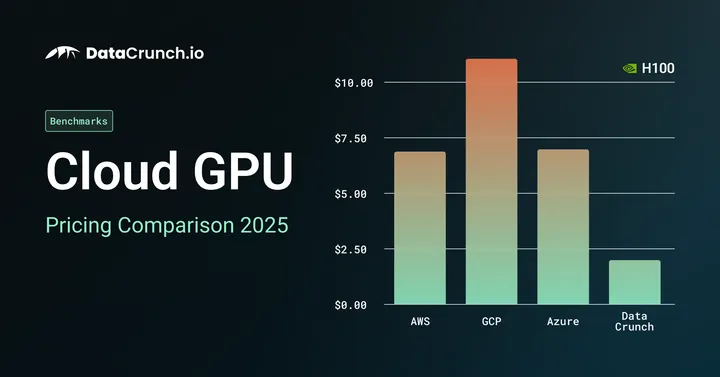

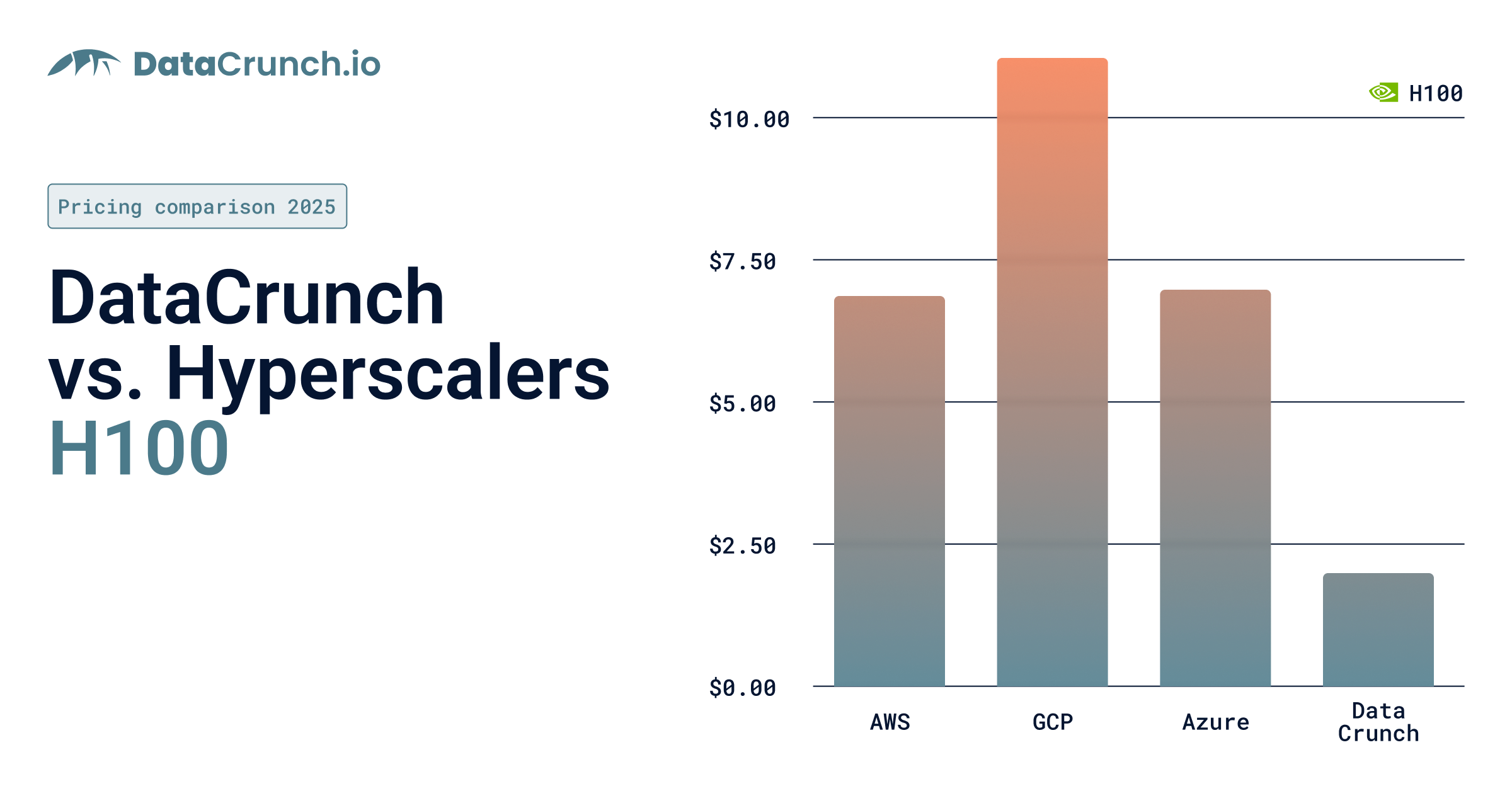

GCP vs. Amazon AWS vs. Azure GPU Cost Comparison

It is not easy to compare Amazon AWS, Google Cloud Platform, and Microsoft Azure cloud GPU prices exactly, because there is not much overlap in their GPU instance offerings. All three providers do currently offer the V100 at similar prices, with Amazon AWS and Azure charging the exact same $3.06 per hour rate. As you can see below, DataCrunch offers tremendous value for H100 instances compared to hyperscalers.
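The V100 is the one GPU all three hyperscalers list, which makes it the simplest like-for-like check. A short sketch using the per-GPU hourly V100 rates quoted in the sections above (the dictionary name is ours):

```python
# Per-GPU hourly V100 16GB list prices (USD) quoted in the hyperscaler sections.
V100_PER_GPU = {"GCP": 2.55, "AWS": 3.06, "Azure": 3.06}

cheapest = min(V100_PER_GPU, key=V100_PER_GPU.get)
most_expensive = max(V100_PER_GPU.values())
saving_pct = (most_expensive - V100_PER_GPU[cheapest]) / most_expensive * 100

print(f"Cheapest V100: {cheapest} at ${V100_PER_GPU[cheapest]:.2f} per GPU-hour")
print(f"Saving vs. the most expensive listing: {saving_pct:.1f}%")
```

On these figures, GCP's V100 rate is about 16.7% below the AWS and Azure rate.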

What are your other options?

In addition to Google, Amazon, and Microsoft, there are other options to consider on the hyperscaler front. Enterprise IT companies like Oracle, IBM, and HP all offer some level of cloud computing capacity. To get an accurate understanding of their pricing, you may need to contact them directly.

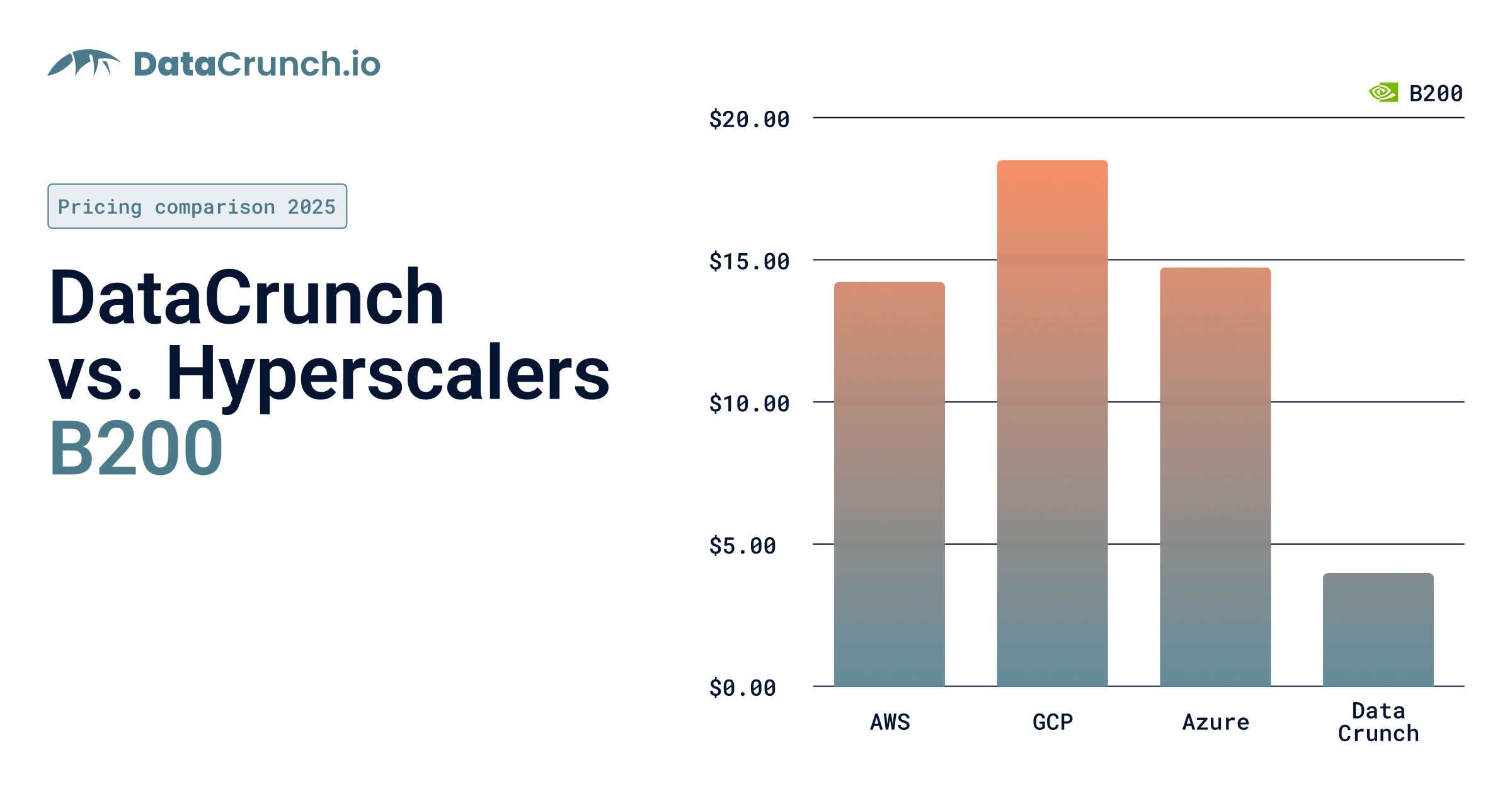

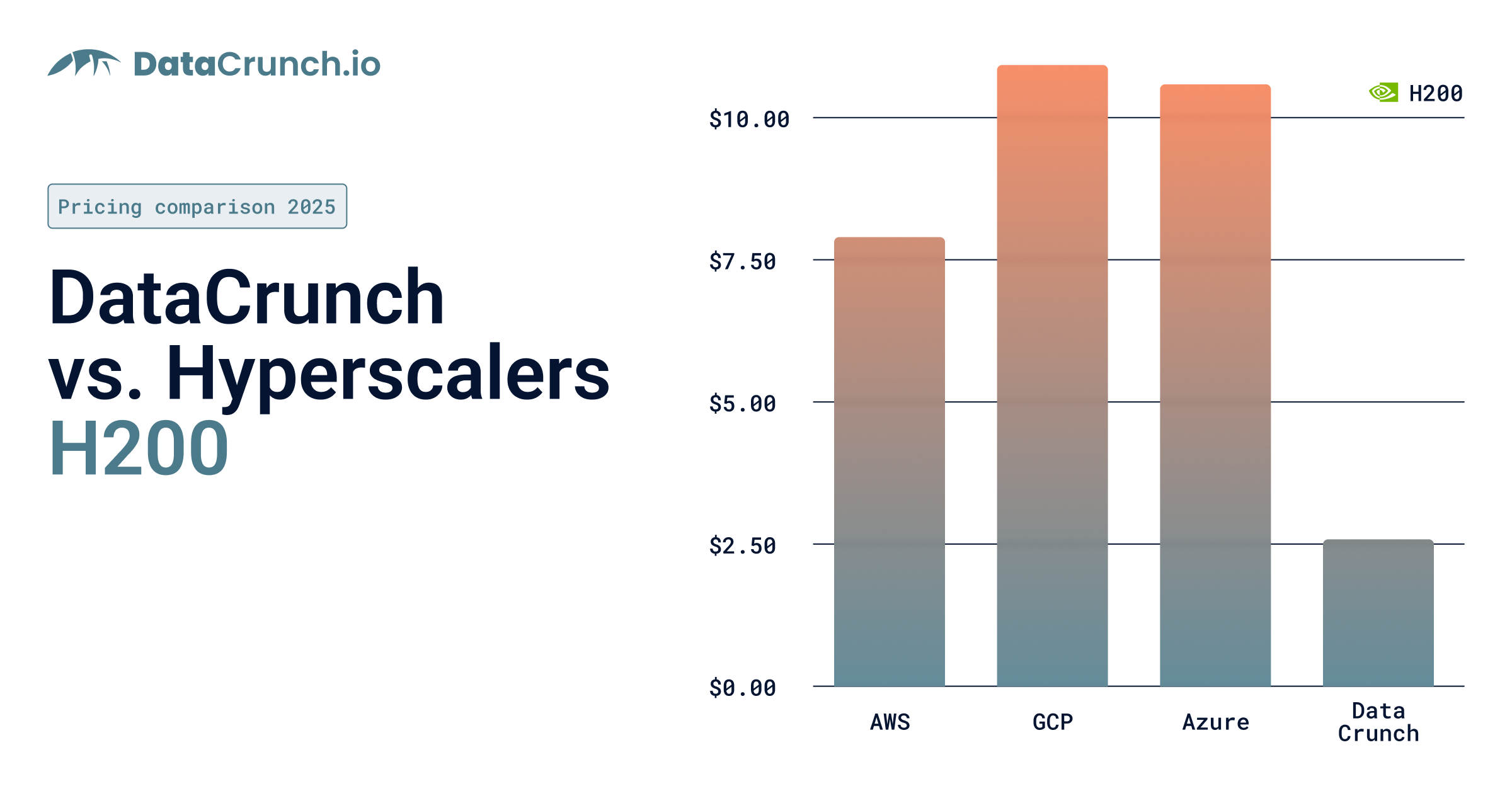

If you’re simply looking for a cost-effective option, you’re not likely to find the best deal from Amazon, Google, Microsoft, or other hyperscalers. ML-focused cloud GPU providers like DataCrunch offer the same high-performance GPUs up to 8x cheaper. For example, the current cost of the H100 on the DataCrunch Cloud Platform is just $1.99 per hour, and the state-of-the-art B200 is available for $3.99 per hour.
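How large the gap is depends on which GPU and which hyperscaler offer you compare against. For the H100 alone, dividing each hyperscaler's per-GPU list price from the tables above by DataCrunch's $1.99 gives the multiple. This is plain arithmetic on list prices and ignores credits, commitments, and spot discounts.

```python
# Per-GPU hourly H100 80GB list prices (USD) taken from the hyperscaler tables above.
HYPERSCALER_H100 = {
    "GCP a3-highgpu-1g": 11.06,
    "AWS p5.48xlarge": 55.04 / 8,  # 8-GPU instance price divided by GPU count
    "Azure NC40ads H100 v5": 6.98,
}
DATACRUNCH_H100 = 1.99  # DataCrunch H100 SXM5 price per GPU-hour

# How many times the DataCrunch rate each hyperscaler offer costs.
multiples = {offer: rate / DATACRUNCH_H100 for offer, rate in HYPERSCALER_H100.items()}

for offer, multiple in multiples.items():
    rate = HYPERSCALER_H100[offer]
    print(f"{offer:22} ${rate:5.2f}/GPU-hour = {multiple:.1f}x the DataCrunch rate")
```

For the H100 on these figures, the multiple ranges from about 3.5x (AWS and Azure) to about 5.6x (GCP).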

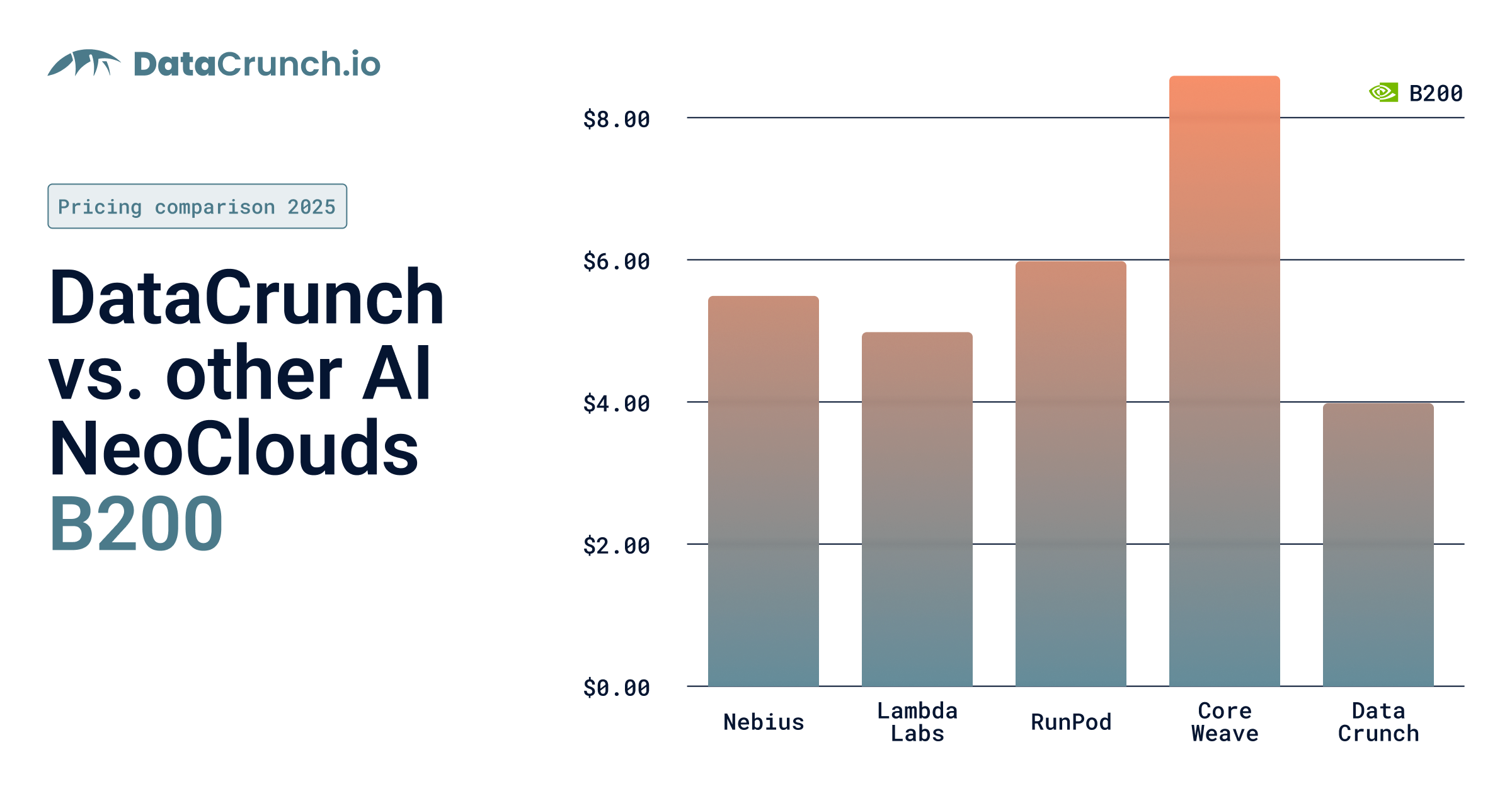

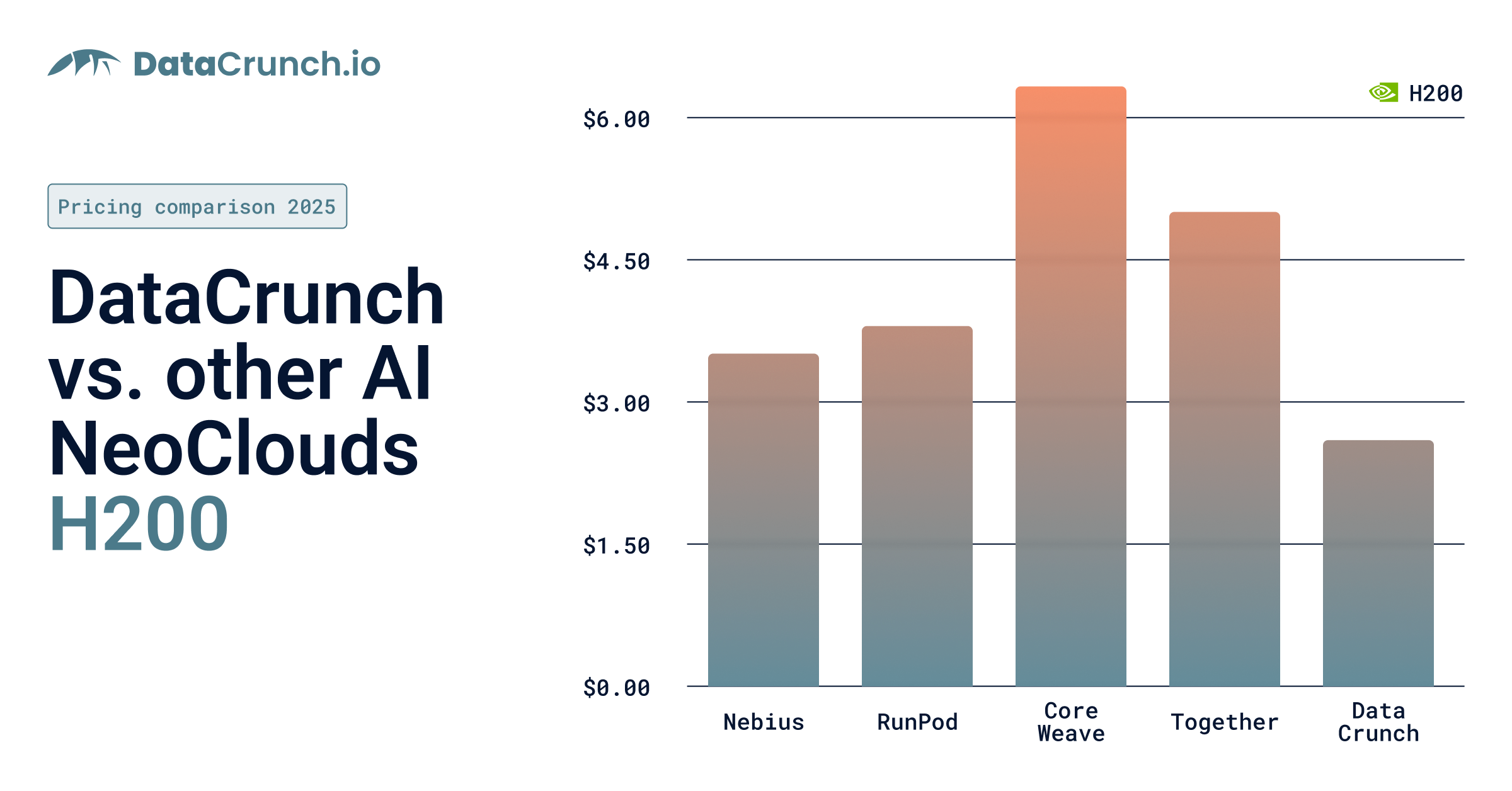

Here’s a comparison chart of hyperscaler pricing for the newer NVIDIA B200 and H200 GPU models.

Here's the current price list of DataCrunch single-GPU instance models, with options of up to 8x GPUs available.

| GPU model | VRAM | Price per GPU-hour (USD) |
|---|---|---|
| B200 SXM6 | 180GB | $3.99 |
| H200 SXM5 | 141GB | $2.59 |
| H100 SXM5 | 80GB | $1.99 |
| A100 SXM4 | 80GB | $1.16 |
| RTX PRO 6000 | 96GB | $1.39 |
| L40S | 48GB | $0.91 |
| RTX 6000 Ada | 48GB | $0.83 |
| RTX A6000 | 48GB | $0.47 |
| Tesla V100 | 16GB | $0.14 |

Whether you need the fastest, latest GPUs or more mature models to match your performance and cost requirements, DataCrunch is your one-stop AI cloud for GPU instances, instant clusters, or bare-metal clusters.
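Because these prices are quoted per GPU, a fixed-price estimate for a multi-GPU instance is simply rate × GPU count × hours. A rough sketch, assuming the whole run is billed at the fixed on-demand rate (no dynamic or spot pricing) and that a multi-GPU instance costs the per-GPU rate times its GPU count; `estimate_cost` is a hypothetical helper, not a DataCrunch API, and only a subset of the rates above is included.

```python
# DataCrunch per-GPU hourly list prices (USD), a subset of the table above.
PRICE_PER_GPU_HOUR = {
    "B200 SXM6": 3.99,
    "H200 SXM5": 2.59,
    "H100 SXM5": 1.99,
    "A100 SXM4": 1.16,
    "L40S": 0.91,
}


def estimate_cost(gpu_model: str, gpu_count: int, hours: float) -> float:
    """Fixed-price estimate: per-GPU rate x number of GPUs x hours."""
    if gpu_count < 1 or hours < 0:
        raise ValueError("gpu_count must be >= 1 and hours must be >= 0")
    return PRICE_PER_GPU_HOUR[gpu_model] * gpu_count * hours


if __name__ == "__main__":
    # Example: a 24-hour run on an 8x H100 instance.
    print(f"${estimate_cost('H100 SXM5', 8, 24):,.2f}")
```

For example, a 24-hour run on an 8x H100 instance comes to $382.08 at the fixed rate.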

NeoCloud Providers

If you’re looking beyond the hyperscalers, there are a number of established cloud computing and hosting providers that offer GPU virtual machines. It’s a crowded field: in addition to DataCrunch, options include Nebius, Lambda, RunPod, Together, CoreWeave, OVH, Paperspace, and Scaleway. As you’ll see below, DataCrunch remains the best value when considering published list prices. Beyond list prices, we also lower your actual spend through dynamic pricing, spot pricing, and efficient GPU utilisation.

Beyond the financials, there are many other reasons to choose DataCrunch as your AI cloud provider.

RunPod Cloud GPU Pricing

RunPod is a small, emerging U.S.-based NeoCloud with affordable GPU instance and cluster access. It offers a wide range of NVIDIA and AMD GPUs, from recent releases to older generations, across many price points, as well as serverless containers. The prices below are for Community Cloud instances of selected models; Secure Cloud (single-tenant) instances are slightly more expensive.

| GPU model | VRAM | RAM | vCPUs | Price (USD/hr) |
|---|---|---|---|---|
| H200 SXM | 141GB | 276GB | 24 | $3.59 |
| B200 | 180GB | 283GB | 28 | $5.98 |
| H100 SXM | 80GB | 125GB | 20 | $2.69 |
| A100 SXM | 80GB | 125GB | 16 | $1.39 |
| L40S | 48GB | 94GB | 16 | $0.79 |
| RTX 6000 Ada | 48GB | 167GB | 10 | $0.74 |
| A40 | 48GB | 50GB | 9 | $0.35 |
| RTX A6000 | 48GB | 50GB | 9 | $0.33 |

More information on RunPod pricing can be found here.

Nebius Cloud GPU Pricing

Nebius, formerly Yandex, is a medium-sized NeoCloud based in Amsterdam whose investors include NVIDIA. Like CoreWeave, it targets large enterprises and has secured a large deal with Microsoft. It offers a broad product line, from GPU instances to clusters, serverless, and managed Kubernetes.

| GPU model | RAM (GB) | Price per GPU-hour (USD) |
|---|---|---|
| NVIDIA GB200 NVL72* | 800 | Contact them |
| NVIDIA HGX B200 | 200 | $5.50 |
| NVIDIA HGX H200 | 200 | $3.50 |
| NVIDIA HGX H100 | 200 | $2.95 |
| NVIDIA L40S | 32-1152 | from $1.55 |

More information on Nebius pricing can be found here.

Lambda Cloud GPU Pricing

Lambda is a Silicon Valley-based company that advertises easy-to-use 1-click clusters. Its complex configurations may challenge some users, but it offers a broad range of GPU compute infrastructure.

| Instance | VRAM per GPU | Price per GPU-hour (USD) |
|---|---|---|
| Quadro RTX 6000 | 24 GB | $0.50 |
| A10 | 24 GB | $0.75 |
| A6000 | 48 GB | $0.80 |
| A100 SXM | 40 GB | $1.29 |
| GH200 | 96 GB | $1.49 |
| H100 SXM | 80 GB | $3.29 |
| B200 SXM6* | 180 GB | $4.99 |

*Note that the B200 is currently only available as an 8x GPU instance configuration.

More information on Lambda pricing can be found here.

Coreweave Cloud GPU Pricing

CoreWeave is one of the largest NeoClouds, having pivoted from its previous cryptocurrency mining business. It has raised significant capital, including $100 million from NVIDIA and a $1.5 billion IPO in 2025. As a result, its infrastructure can support thousands of GPU clusters and use cases including large-scale training and inference. However, because of its focus on high-touch, large enterprise sales, its onboarding is not designed for quick, hassle-free access to GPU clusters.

| GPU model | Instance price (USD/hr) | GPU count | Price per GPU-hour (USD) |
|---|---|---|---|
| NVIDIA B200 | $68.80 | 8 | $8.60 |
| NVIDIA HGX H200 | $50.44 | 8 | $6.30 |
| NVIDIA HGX H100 | $49.24 | 8 | $6.16 |
| NVIDIA GB200 NVL72 | $42.00 | 4 | $10.50 |
| NVIDIA A100 | $21.60 | 8 | $2.70 |
| NVIDIA RTX PRO 6000 Blackwell Server Edition | $20.00 | 8 | $2.50 |
| NVIDIA L40S | $18.00 | 8 | $2.25 |
| NVIDIA GH200 | $6.50 | 1 | $6.50 |
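The per-GPU column in the CoreWeave table is derived from the instance price, so it can be re-checked mechanically. This sketch uses Python's `decimal` module so that half-cent results such as $50.44 / 8 = $6.305 are not distorted by binary floating point. With `ROUND_HALF_EVEN` (banker's rounding), every derived value matches the listed one; which rounding rule CoreWeave itself applies is not stated, so treat that choice as an assumption.

```python
from decimal import ROUND_HALF_EVEN, Decimal

# (instance price USD/hr, GPU count, listed price per GPU-hour) from the CoreWeave table.
COREWEAVE = {
    "NVIDIA B200": ("68.80", 8, "8.60"),
    "NVIDIA HGX H200": ("50.44", 8, "6.30"),
    "NVIDIA HGX H100": ("49.24", 8, "6.16"),
    "NVIDIA GB200 NVL72": ("42.00", 4, "10.50"),
    "NVIDIA A100": ("21.60", 8, "2.70"),
    "NVIDIA RTX PRO 6000 Blackwell Server Edition": ("20.00", 8, "2.50"),
    "NVIDIA L40S": ("18.00", 8, "2.25"),
    "NVIDIA GH200": ("6.50", 1, "6.50"),
}
CENT = Decimal("0.01")


def mismatches(table: dict[str, tuple[str, int, str]]) -> list[tuple[str, Decimal, Decimal]]:
    """Return rows whose listed per-GPU price differs from instance price / GPU count."""
    bad = []
    for model, (instance, gpus, listed) in table.items():
        # Exact decimal division, then round to whole cents (half-even).
        derived = (Decimal(instance) / gpus).quantize(CENT, rounding=ROUND_HALF_EVEN)
        if derived != Decimal(listed):
            bad.append((model, derived, Decimal(listed)))
    return bad


if __name__ == "__main__":
    print(mismatches(COREWEAVE) or "all rows consistent")
```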

More information on CoreWeave pricing can be found here.

OVHcloud Cloud GPU Pricing

OVHcloud is Europe’s largest cloud computing provider. Headquartered in France, OVHcloud has customers across the globe and data centers in multiple international locations. In addition to various CPU VM instances, OVHcloud offers on-demand instances of popular cloud GPUs.

| Instance name | GPU | Price per hour (USD) |
|---|---|---|
| h100-1-gpu | H100 80GB | $3.39 |
| a100-1-gpu | A100 80GB | $3.35 |
| t2-45 | V100 32GB | $2.19 |
| t2-90 | V100 32GB x2 | $4.38 |
| t2-180 | V100 32GB x4 | $8.86 |
| l40-1-gpu | L40S 48GB | $1.69 |

OVHcloud offers high-performance NVIDIA instances in a number of different configurations. Notably, its H100 instance is priced almost the same as its A100 instance ($3.39 vs. $3.35 per hour).

OVHcloud also has multiple instances of the V100 and L40S available in addition to dedicated servers and data hosting services.

Up-to-date pricing from OVH can be found here.

Paperspace Cloud GPU Pricing

Paperspace, owned by the web hosting company DigitalOcean, is a major cloud computing platform focused on deploying machine learning models.

Paperspace offers multi-GPU instances of the H100, A100, and V100. In addition, it offers various RTX-based GPUs on demand, including the RTX A6000, RTX 5000, and RTX 4000.

The current hourly price for an H100 GPU instance from Paperspace is $5.95, but they do offer discounts on multi-year commitments.

| GPU | Price per hour (USD) |
|---|---|
| H100 80GB | $5.95 |
| H100 80GB x8 | $47.60 |
| A100 80GB x8 | $25.44 |
| V100 32GB | $2.30 |
| V100 32GB x2 | $4.60 |
| V100 32GB x4 | $9.20 |
| RTX A6000 48GB | $1.89 |

More information on Paperspace pricing can be found here.

Scaleway Cloud GPU Pricing

Scaleway is another large web hosting and cloud computing company with headquarters in France and a presence in many international locations. Like OVHcloud, Scaleway offers a broad range of virtual machines and cloud storage options, including cloud GPU instances.

| Instance name | GPU | Price per hour (USD) |
|---|---|---|
| H100-1-80G | H100 80GB | $2.73 |
| H100-2-80G | H100 80GB x2 | $5.46 |
| L40S-1-48G | L40S 48GB | $1.40 |
| L40S-2-48G | L40S 48GB x2 | $2.80 |
| L40S-4-48G | L40S 48GB x4 | $5.60 |
| L40S-8-48G | L40S 48GB x8 | $11.20 |

Scaleway currently offers one of the lowest hourly prices for an H100 instance among large cloud GPU providers. It also offers L40S and P100 instances.
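Putting the H100 80GB per-GPU prices from the NeoCloud sections side by side shows where Scaleway lands. A minimal ranking sketch: the numbers are the list prices quoted in this article (CoreWeave's is its 8-GPU instance price divided by eight, and RunPod's is the Community Cloud rate), and the comparison ignores differences in CPU, RAM, networking, and billing terms.

```python
# H100 80GB per-GPU hourly list prices (USD) quoted in the NeoCloud sections.
H100_PER_GPU_HOUR = {
    "DataCrunch": 1.99,
    "RunPod (Community Cloud)": 2.69,
    "Scaleway": 2.73,
    "Nebius": 2.95,
    "Lambda": 3.29,
    "OVHcloud": 3.39,
    "Paperspace": 5.95,
    "CoreWeave": 6.16,
}

# Sort providers from cheapest to most expensive per GPU-hour.
ranked = sorted(H100_PER_GPU_HOUR.items(), key=lambda item: item[1])

for position, (provider, rate) in enumerate(ranked, start=1):
    print(f"{position}. {provider:26} ${rate:.2f} per GPU-hour")
```

On these list prices, Scaleway ranks third, behind DataCrunch and RunPod's Community Cloud.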

Scaleway also provides dedicated servers and bare metal solutions.

More information on latest Scaleway GPU pricing can be found here.

DataCrunch Cloud GPU Pricing

If you’re open to working with new cloud GPU providers, you should consider DataCrunch. We represent a new generation of AI-focused accelerated computing platforms built by AI engineers for AI engineers.

We only work with premium NVIDIA cloud GPUs and emphasise speed, value for money, and efficiency in both our hardware and software solutions. In some cases, we’ve been able to reduce the cost of AI inference by 70% simply by getting more efficiency out of NVIDIA’s hardware stack. In a direct cost comparison, our fixed hourly GPU instance rates are competitive.

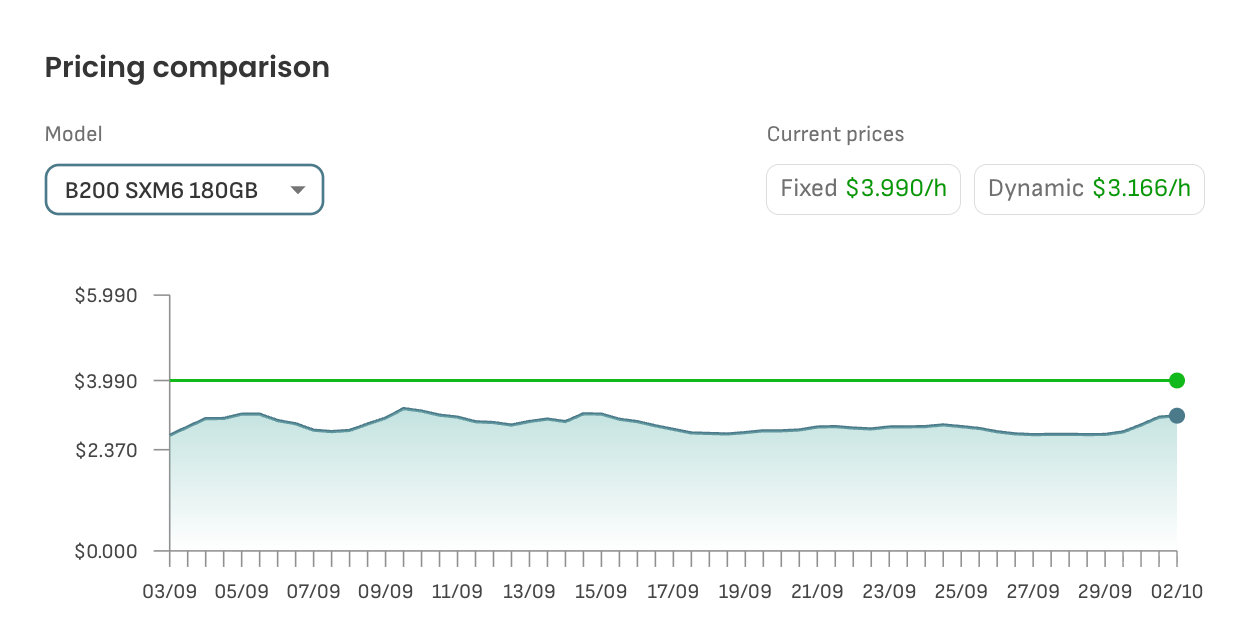

Not only that, we are the first cloud GPU platform to offer dynamic pricing: a new way to reduce the cost of AI-focused computing on our less-used inventory.

This is how dynamic pricing works: when we have spare capacity on a GPU model, like the B200 SXM6 example below, we offer a discounted hourly rate for GPU instances. By choosing dynamic pricing instead of a fixed price, you can save up to 40% on GPU instances, especially at off-peak times and on GPU models with lower demand.
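To see what "up to 40%" can mean for an actual job, you can compare the fixed rate with a sequence of dynamic hourly rates. The dynamic rates below are invented for illustration only; real rates vary with spare capacity. The sketch also computes the floor that a 40% maximum discount implies for the $3.99 B200 SXM6 fixed rate.

```python
FIXED_RATE = 3.99    # B200 SXM6 fixed price per GPU-hour (USD), from the price list
MAX_DISCOUNT = 0.40  # "save up to 40%"

# Lowest hourly rate the stated maximum discount would allow.
floor_rate = FIXED_RATE * (1 - MAX_DISCOUNT)

# HYPOTHETICAL dynamic rates for a 4-hour, single-GPU job (illustration only).
dynamic_rates = [3.19, 2.79, 2.49, 2.99]
assert min(dynamic_rates) >= floor_rate, "rates must respect the 40% maximum discount"

fixed_cost = FIXED_RATE * len(dynamic_rates)
dynamic_cost = sum(dynamic_rates)
saving_pct = (fixed_cost - dynamic_cost) / fixed_cost * 100

print(f"Floor rate at 40% off: ${floor_rate:.3f} per GPU-hour")
print(f"Fixed: ${fixed_cost:.2f}  Dynamic: ${dynamic_cost:.2f}  Saved: {saving_pct:.1f}%")
```

With these made-up rates, the job costs $11.46 instead of $15.96, a saving of about 28%.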

At DataCrunch, we don’t just stand out for our flexible and fair pricing. Our data centers run on 100% renewable energy and use enterprise-grade security. We’re often praised for the expert-level service we provide to customers. To learn more, visit our Why DataCrunch page or our FAQs.

The best way to see if DataCrunch is a good option for you is to spin up an instance on our intuitive and easy-to-use GPU Cloud Platform. For an even better deal, you can reach out to us to request a quote on bare metal clusters or serverless inference solutions.

All of the pricing and cost information provided here is accurate as of 15th of October 2025. If you find any errors, you can reach out to us on our chat, developer community, Reddit or X.

Third-party pricing references include: CloudPrice.net, JarvisLabs, ThunderCompute, and Modal.